Two paradigms dominate the conversation about distributed systems: Edge Computing and Cloud Computing. Both aim to process and manage data efficiently, but they differ in architecture, latency profile, and ideal use cases. This post unpacks their core differences, trade-offs, and real-world applications from a technical angle.

What is Cloud Computing?

Cloud Computing centralizes data processing and storage in massive, remote data centers operated by providers like AWS, Azure, or Google Cloud. Think of it as a heavyweight server farm accessible over the internet, delivering scalable compute power, storage, and services on demand.

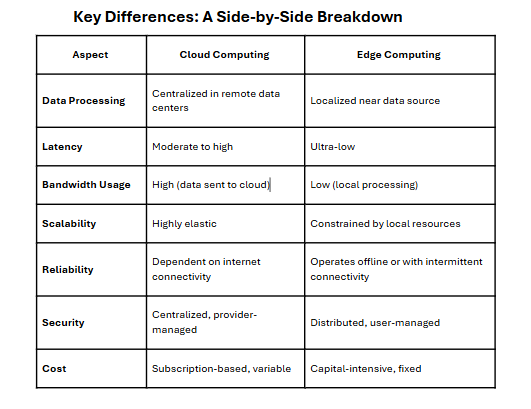

- Architecture: Centralized, with data traveling to and from distant servers.

- Latency: Higher due to network hops, typically 50-200ms round-trip depending on geography.

- Scalability: Near-infinite, with elastic resource allocation.

- Cost Model: Pay-as-you-go, often with egress bandwidth charges.

- Management: Provider-managed infrastructure, abstracting hardware complexity.

What is Edge Computing?

Edge Computing pushes processing closer to the data source: think IoT devices, local gateways, or on-premises servers. It’s about minimizing latency and bandwidth use by handling compute tasks at the network’s periphery.

- Architecture: Decentralized, with compute nodes near or at the data origin.

- Latency: Ultra-low, often <10ms, critical for real-time applications.

- Scalability: Limited by local hardware, though hybrid models integrate with cloud.

- Cost Model: Upfront hardware investment, lower bandwidth costs.

- Management: Often user-managed, requiring local expertise.
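
To make the latency gap concrete, here is a minimal sketch that checks whether a request fits inside a response deadline. The round-trip figures come from the ranges quoted above; the 5 ms processing time and the 20 ms deadline are assumptions picked purely for illustration, and `fits_deadline` is a hypothetical helper, not part of any library.

```python
def fits_deadline(round_trip_ms: float, processing_ms: float, deadline_ms: float) -> bool:
    """Return True if network round trip plus processing fits within the deadline."""
    return round_trip_ms + processing_ms <= deadline_ms


# Round-trip values taken from the ranges quoted in this post.
CLOUD_RTT_MS = 50.0   # optimistic end of the 50-200 ms cloud range
EDGE_RTT_MS = 10.0    # upper bound of the "<10 ms" edge figure

# Assumed values, for illustration only.
PROCESSING_MS = 5.0
DEADLINE_MS = 20.0

cloud_ok = fits_deadline(CLOUD_RTT_MS, PROCESSING_MS, DEADLINE_MS)
edge_ok = fits_deadline(EDGE_RTT_MS, PROCESSING_MS, DEADLINE_MS)
print(f"cloud fits: {cloud_ok}, edge fits: {edge_ok}")
```

Under these assumed numbers, even the best-case cloud round trip blows the budget before any processing happens, while the edge path leaves a little headroom. Real figures depend heavily on your network and workload, so measure before deciding.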

Use Cases

Cloud Computing Use Cases

Cloud Computing thrives in scenarios demanding massive scale, centralized management, and flexible resource allocation. Its sweet spot includes:

- Big Data Analytics: Processing petabytes of data for machine learning models or business intelligence dashboards. Example: Running Spark clusters on AWS EMR to analyze customer behavior.

- Web Applications: Hosting scalable SaaS platforms like CRMs or e-commerce sites. Think Shopify or Salesforce, leveraging cloud elasticity for traffic spikes.

- Backup and Disaster Recovery: Storing redundant data across geo-distributed regions for compliance and resilience.

- DevOps Pipelines: CI/CD workflows on platforms like GitHub Actions or Jenkins, tapping cloud VMs for build and test environments.

The cloud’s centralized nature makes it ideal for workloads where latency isn’t mission-critical and global accessibility is key.

Edge Computing Use Cases

Edge Computing dominates where low latency, local processing, or intermittent connectivity is non-negotiable. Its killer apps include:

- IoT and Smart Devices: Real-time data processing in smart homes or industrial sensors. Example: A factory’s edge gateway analyzing vibration data to predict equipment failure.

- Autonomous Vehicles: Split-second decision-making for navigation and obstacle avoidance, where cloud round-trips are too slow.

- Retail and Point-of-Sale: Local processing for inventory management or personalized promotions in stores, even during network outages.

- Telemedicine: Edge devices in remote clinics processing patient vitals for immediate diagnostics, minimizing reliance on spotty internet.

Edge excels in distributed, latency-sensitive environments, often complementing cloud for hybrid workflows.
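
The factory gateway example can be sketched as a rolling check that runs entirely on the edge device. This is one simple approach (a z-score against a sliding window of recent readings), not a claim about how production predictive-maintenance systems work. The class name `VibrationMonitor`, the window size, and the threshold are all assumptions made for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flags readings that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0):
        self.readings = deque(maxlen=window)  # most recent normal readings
        self.z_threshold = z_threshold        # assumed cut-off, tune per machine

    def observe(self, value: float) -> bool:
        """Return True if the value looks anomalous relative to the window."""
        anomalous = False
        # Only judge once the window is full, so the baseline is meaningful.
        if len(self.readings) == self.readings.maxlen:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                anomalous = True
        # Keep anomalies out of the baseline so one spike does not mask the next.
        if not anomalous:
            self.readings.append(value)
        return anomalous


monitor = VibrationMonitor(window=4)
for amplitude in [1.0, 1.1, 1.0, 1.1, 5.0, 1.05]:
    if monitor.observe(amplitude):
        print(f"anomaly: {amplitude}")
```

Because the decision is made locally, the gateway can raise an alert without waiting on a cloud round trip, and only the flagged events need to leave the site.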

Hybrid Models: The Best of Both Worlds

In practice, many deployments blend edge and cloud. Edge nodes handle real-time tasks, while the cloud aggregates data for long-term storage or heavy-duty analytics. For instance:

- Smart Cities: Edge devices process traffic camera feeds locally to optimize signals, while cloud systems analyze historical patterns for urban planning.

- Content Delivery Networks (CDNs): Edge servers cache video streams for low-latency delivery, with cloud backends managing global content distribution.

This hybrid approach balances immediacy with scalability, leveraging edge for speed and cloud for depth.
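
Here is a rough sketch of that split: the edge node summarizes raw readings locally and forwards only compact summaries to the cloud, holding them in a queue while the uplink is down. Everything here is hypothetical, including the `EdgeNode` class and the `upload` callable, which stands in for whatever cloud API you would actually call.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass
class EdgeNode:
    # Hypothetical uplink: takes a summary dict, returns True if the cloud accepted it.
    upload: Callable[[dict], bool]
    batch_size: int = 5
    raw: list = field(default_factory=list, init=False)      # readings not yet summarized
    pending: list = field(default_factory=list, init=False)  # summaries awaiting upload

    def ingest(self, reading: float) -> None:
        """Collect a reading; summarize and try to upload once a batch is full."""
        self.raw.append(reading)
        if len(self.raw) >= self.batch_size:
            summary = {
                "count": len(self.raw),
                "mean": mean(self.raw),
                "max": max(self.raw),
            }
            self.raw.clear()
            self.pending.append(summary)
            self.flush()

    def flush(self) -> None:
        """Send queued summaries in order, stopping at the first failure."""
        while self.pending:
            if not self.upload(self.pending[0]):
                break  # uplink is down; keep the summary for the next attempt
            self.pending.pop(0)


received = []
node = EdgeNode(upload=lambda s: received.append(s) is None, batch_size=2)
for value in [1.0, 3.0, 5.0, 7.0]:
    node.ingest(value)
print(received)
```

The cloud side only ever sees one small summary per batch, which is where the bandwidth savings come from. A real deployment would also persist the pending queue to disk so summaries survive a restart.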

Trade-Offs and Considerations

Choosing between edge and cloud, or architecting a hybrid solution, hinges on your workload’s demands:

- Latency Requirements: If sub-10ms response times are critical (e.g., robotics), edge is non-negotiable.

- Data Volume: Massive datasets or archival needs favor the cloud’s storage scalability.

- Connectivity: Remote or unstable network environments lean toward edge’s offline capabilities.

- Budget: Cloud’s OPEX model suits variable workloads; edge’s CAPEX suits predictable, localized ones.

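- Security: Cloud providers secure the underlying infrastructure, though access control and data protection for your own workloads remain your job; edge puts the physical and software security of every device in your hands.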
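
A few of these considerations can be folded into a toy decision helper. Treat it as a conversation starter rather than a real sizing tool: the `Workload` fields and the `recommend` function are hypothetical, it ignores budget and security entirely, and the only threshold it uses is the sub-10ms figure mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    max_latency_ms: float            # tightest response time the workload tolerates
    reliable_network: bool           # False for remote or unstable connectivity
    needs_large_scale_storage: bool  # massive datasets or archival requirements


def recommend(w: Workload) -> str:
    """Return 'edge', 'cloud', or 'hybrid' from a deliberately simplified rule set."""
    needs_edge = w.max_latency_ms < 10 or not w.reliable_network
    needs_cloud = w.needs_large_scale_storage
    if needs_edge and needs_cloud:
        return "hybrid"
    if needs_edge:
        return "edge"
    return "cloud"


robot_arm = Workload(max_latency_ms=5, reliable_network=True, needs_large_scale_storage=False)
smart_city = Workload(max_latency_ms=8, reliable_network=True, needs_large_scale_storage=True)
bi_dashboard = Workload(max_latency_ms=500, reliable_network=True, needs_large_scale_storage=True)

for name, w in [("robot arm", robot_arm), ("smart city", smart_city), ("BI dashboard", bi_dashboard)]:
    print(f"{name}: {recommend(w)}")
```

In practice you would weigh these factors rather than apply hard rules, but writing them down this way makes it obvious how quickly real workloads land in the hybrid bucket.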

The Future: Convergence and Evolution

As 5G and satellite networks (like Starlink) shrink latency and boost connectivity, the lines between edge and cloud are blurring. Expect tighter integration, with edge nodes acting as cloud extensions, and frameworks like Kubernetes unifying orchestration across both. Emerging standards, such as WebAssembly for lightweight edge compute, will further bridge the gap.

Wrapping Up

Edge Computing and Cloud Computing aren’t rivals; they’re complementary tools in the modern tech stack. Cloud powers scalable, centralized workloads; edge delivers real-time, localized processing. By understanding their strengths and mapping them to your use case, you can architect systems that are both performant and cost-effective. Whether you’re building an IoT mesh, a global SaaS platform, or a hybrid smart grid, the choice between edge, cloud, or both shapes the future of your infrastructure.

Got a project in mind?

Drop a comment!