If you have ever tried to get a bank loan, you know it usually involves a lot of paperwork, many phone calls, and days of waiting. But in 2026, things are changing fast in India thanks to something called ULI (Unified Lending Interface).

Think of ULI as “UPI for Loans.” Just as UPI made sending money as easy as scanning a QR code, ULI is making borrowing as simple as a few taps on your phone.

What is ULI?

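ULI is a digital platform developed by the Reserve Bank of India (RBI) through its subsidiary, the Reserve Bank Innovation Hub (RBIH). It acts as a bridge between a person who needs a loan and the data a lender needs to approve that loan, such as land records and GST filings, which are shared only with the borrower's consent.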

4 Ways ULI is Transforming Lending Software

1. Solving the Integration Challenge with Unified APIs

In the past, fintech solution providers struggled to connect their software to different government databases, land records, and banks. It was a slow and expensive process.

ULI removes most of that struggle. Instead of building and maintaining hundreds of custom integrations, software providers now use a single “plug-and-play” connection. This lets your team focus on building great products instead of fixing broken data pipes.
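To illustrate the "one connection instead of many" idea, here is a minimal Python sketch of the facade pattern: lending code calls a single `fetch()` method, and a registry routes each request to the right data source. Everything in it is hypothetical (`UnifiedDataGateway`, the adapter functions, the source keys); it is not ULI's actual API, and the adapters return canned data instead of making network calls.

```python
from typing import Callable, Dict


# Hypothetical adapters: each stands in for one upstream data source.
# A real system would call published APIs here; these return canned data
# so the sketch runs offline.
def land_records_adapter(borrower_id: str) -> dict:
    return {"source": "land_records", "borrower": borrower_id, "acres": 2.5}


def gstn_adapter(borrower_id: str) -> dict:
    return {"source": "gstn", "borrower": borrower_id, "annual_turnover": 1_200_000}


class UnifiedDataGateway:
    """Single entry point that routes requests to registered data sources.

    Lending code only ever calls fetch(); it never talks to an individual
    provider, so adding a new source means registering one adapter.
    """

    def __init__(self) -> None:
        self._adapters: Dict[str, Callable[[str], dict]] = {}

    def register(self, name: str, adapter: Callable[[str], dict]) -> None:
        self._adapters[name] = adapter

    def fetch(self, name: str, borrower_id: str) -> dict:
        if name not in self._adapters:
            raise KeyError(f"unknown data source: {name}")
        return self._adapters[name](borrower_id)


gateway = UnifiedDataGateway()
gateway.register("land_records", land_records_adapter)
gateway.register("gstn", gstn_adapter)

print(gateway.fetch("gstn", "B-001")["annual_turnover"])  # 1200000
```

The design choice is that integration cost moves into one place: the lending product depends on the gateway's interface, not on the quirks of each database behind it.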

2. Underwriting with “Precision Intelligence”

Lending software no longer relies on the CIBIL score alone. In 2026, the industry has shifted toward alternative credit models powered by the “New Trinity”: JAM (Jan Dhan, Aadhaar, Mobile), UPI, and ULI.

- Beyond Financials: Software can now pull non-financial data points, such as satellite-derived crop health, dairy-related insights, and utility payment history, with a single consent request.

- The Outcome: This has unlocked credit for “thin-file” borrowers (those without traditional bank history). By early 2026, ULI-enabled platforms have already facilitated hundreds of thousands of loans for tenant farmers and small-scale urban vendors.
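The “single consent request” idea can be sketched as one consent record that lists every data type the borrower agreed to share, plus a fetch function that serves all of them at once and refuses anything outside that scope. This is a minimal sketch under stated assumptions: the `Consent` fields, the source names, and the data values are all hypothetical and do not reflect ULI's real consent format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, Dict, FrozenSet, List


@dataclass(frozen=True)
class Consent:
    """Hypothetical consent record: what the borrower agreed to share, and until when."""
    borrower_id: str
    data_types: FrozenSet[str]
    expires_at: datetime

    def allows(self, data_type: str, now: datetime) -> bool:
        return data_type in self.data_types and now < self.expires_at


# Stand-ins for non-financial data sources; the values are made up.
SOURCES: Dict[str, Callable[[str], dict]] = {
    "crop_health": lambda borrower_id: {"ndvi": 0.71},  # NDVI: a satellite vegetation index
    "utility_payments": lambda borrower_id: {"on_time_ratio": 0.95},
    "bank_statements": lambda borrower_id: {"avg_balance": 18_000},
}


def fetch_with_consent(consent: Consent, requested: List[str], now: datetime) -> Dict[str, dict]:
    """Fetch every requested data point under ONE consent and refuse anything outside its scope."""
    denied = [d for d in requested if not consent.allows(d, now)]
    if denied:
        raise PermissionError(f"not covered by consent: {denied}")
    return {d: SOURCES[d](consent.borrower_id) for d in requested}


now = datetime(2026, 1, 15, tzinfo=timezone.utc)
consent = Consent("B-001", frozenset({"crop_health", "utility_payments"}), now + timedelta(days=30))

data = fetch_with_consent(consent, ["crop_health", "utility_payments"], now)
print(sorted(data))  # ['crop_health', 'utility_payments']

# Requesting "bank_statements" would raise PermissionError: the borrower never shared it.
```

Checking scope before any fetch runs means a lender cannot quietly collect extra data for a thin-file borrower; the consent record, not the lender's code, decides what is visible.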

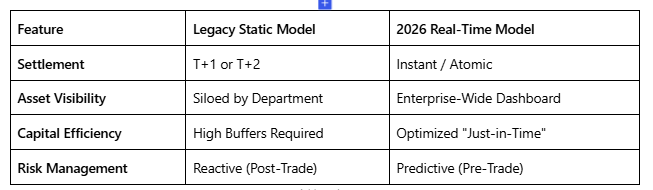

3. From “Days” to “Minutes”: The STP Revolution

Turnaround Time (TAT) used to be measured in business days. In 2026, the industry standard for ULI-compliant software is Straight-Through Processing (STP), in which an application moves from submission to decision without manual intervention.

- Frictionless Flow: By automating identity verification (e-KYC), asset valuation (land records), and income assessment (GSTN), the software removes human bottlenecks.

- Volume Spike: Recent data shows that in a single month (April 2025), ULI facilitated over 1.4 million loans totaling ₹65,000 crore. Processing that volume would have been impossible with legacy, manual verification workflows.
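The STP flow described above can be sketched as a chain of automated checks: if every check passes, the application is approved with no human step; if any fails, it is routed to an underwriter along with the reasons. The three checks stand in for e-KYC, land-record, and GSTN lookups, but here they only read pre-filled fields. The `Application` fields and the "loan at most 25% of annual turnover" rule are invented for illustration and are not a ULI or RBI requirement.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Application:
    borrower_id: str
    amount: int            # requested amount in rupees
    kyc_verified: bool     # result of an e-KYC check (stubbed here)
    owns_land: bool        # result of a land-records lookup (stubbed here)
    annual_turnover: int   # from GST filings (stubbed here), in rupees


# Each check returns (passed, reason). The rules are illustrative only.
Check = Callable[[Application], Tuple[bool, str]]


def check_kyc(app: Application) -> Tuple[bool, str]:
    return app.kyc_verified, "e-KYC"


def check_land(app: Application) -> Tuple[bool, str]:
    return app.owns_land, "land records"


def check_income(app: Application) -> Tuple[bool, str]:
    # Hypothetical rule: the loan may not exceed 25% of annual GST turnover.
    return app.amount <= app.annual_turnover // 4, "income (GSTN)"


CHECKS: List[Check] = [check_kyc, check_land, check_income]


def process(app: Application) -> str:
    """Approve automatically when every check passes; otherwise refer to a human with reasons."""
    failures = [reason for ok, reason in (check(app) for check in CHECKS) if not ok]
    if not failures:
        return "auto-approved"
    return "refer to underwriter: " + ", ".join(failures)


print(process(Application("B-001", 200_000, True, True, 1_200_000)))
# auto-approved
print(process(Application("B-002", 500_000, True, False, 1_200_000)))
# refer to underwriter: land records, income (GSTN)
```

Only the exceptions reach a person, which is what lets throughput scale: clean applications never wait in a manual queue.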

4. Security as a Feature, Not a Burden

With the Digital Personal Data Protection (DPDP) Act, 2023 and its implementing rules now taking effect, compliance is a top priority for 2026.

- Consent-First Architecture: ULI-ready software doesn’t “scrape” data; it requests it. The system ensures that the lender sees only what the borrower allows.

- Auditability: Built-in digital audit trails mean that every piece of data used in a loan decision can be traced and verified, significantly reducing the “compliance debt” for banks and NBFCs.
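One common way to make a trail like this verifiable is a hash chain: each log entry stores the SHA-256 hash of the previous entry, so editing any past record breaks every link after it. The minimal sketch below shows that generic technique; it is not a description of ULI's internals, and the event names and fields are hypothetical.

```python
import hashlib
import json
from typing import List


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _hash(body: dict) -> str:
        # sort_keys makes the JSON serialization deterministic
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, event: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"event": event, "prev": prev}
        self.entries.append({**body, "hash": self._hash(body)})

    def verify(self) -> bool:
        """Recompute every hash; any edited entry makes this return False."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {"event": entry["event"], "prev": entry["prev"]}
            if entry["prev"] != prev or entry["hash"] != self._hash(body):
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record({"action": "consent_granted", "borrower": "B-001", "data": ["gstn"]})
trail.record({"action": "data_fetched", "source": "gstn"})
trail.record({"action": "decision", "result": "approved"})
print(trail.verify())  # True

trail.entries[1]["event"]["source"] = "land_records"  # tamper with history
print(trail.verify())  # False
```

A hash chain makes tampering detectable rather than impossible; production systems typically also anchor the latest hash somewhere the lender cannot rewrite.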

Why 2026 is a Big Year

Not long ago, ULI was only a pilot. Today, nearly 90 banks and lenders use it, and it has expanded from farm lending to small-business loans and personal loans for individual borrowers.

Summary: Why it Matters

The “Old Way” of lending was slow, expensive, and paper-heavy. The “ULI Way” is:

- Fast: Get money when you need it.

- Fair: Based on real data, not just old credit scores.

- Simple: Everything happens on your smartphone.